Mars Habitat Builder

An LLM-Powered Robotic Construction Arm

Vision

Putting humans on Mars within our generation is now a realistic prospect. Much of the current effort goes into solving transportation, but off-world habitation remains an unsolved problem.

We address this with an AI-driven robotic system that constructs habitats from blocks. We take an AI-based approach because of the communication latency between Earth and Mars: the one-way signal delay ranges from roughly 3 to 22 minutes, which rules out real-time teleoperation, so the system must operate autonomously.
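The latency argument can be made concrete with a short calculation: the one-way delay is the Earth-Mars distance divided by the speed of light. The distances below are approximate extremes (about 54.6 million km at closest approach and about 401 million km near solar conjunction).

```python
# One-way signal delay between Earth and Mars, assuming propagation at the speed of light.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def one_way_delay_minutes(distance_km: float) -> float:
    """Return the one-way light-travel time in minutes for a distance given in kilometres."""
    return distance_km * 1_000 / SPEED_OF_LIGHT_M_PER_S / 60


# Approximate Earth-Mars distance extremes in kilometres.
for label, distance_km in [("closest", 54.6e6), ("farthest", 401e6)]:
    delay = one_way_delay_minutes(distance_km)
    print(f"{label}: {delay:.1f} min one way, {2 * delay:.1f} min round trip")
```

At these distances the one-way delay is roughly 3 and 22 minutes, so a command-and-confirm round trip takes between about 6 and 45 minutes.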

Project Goal

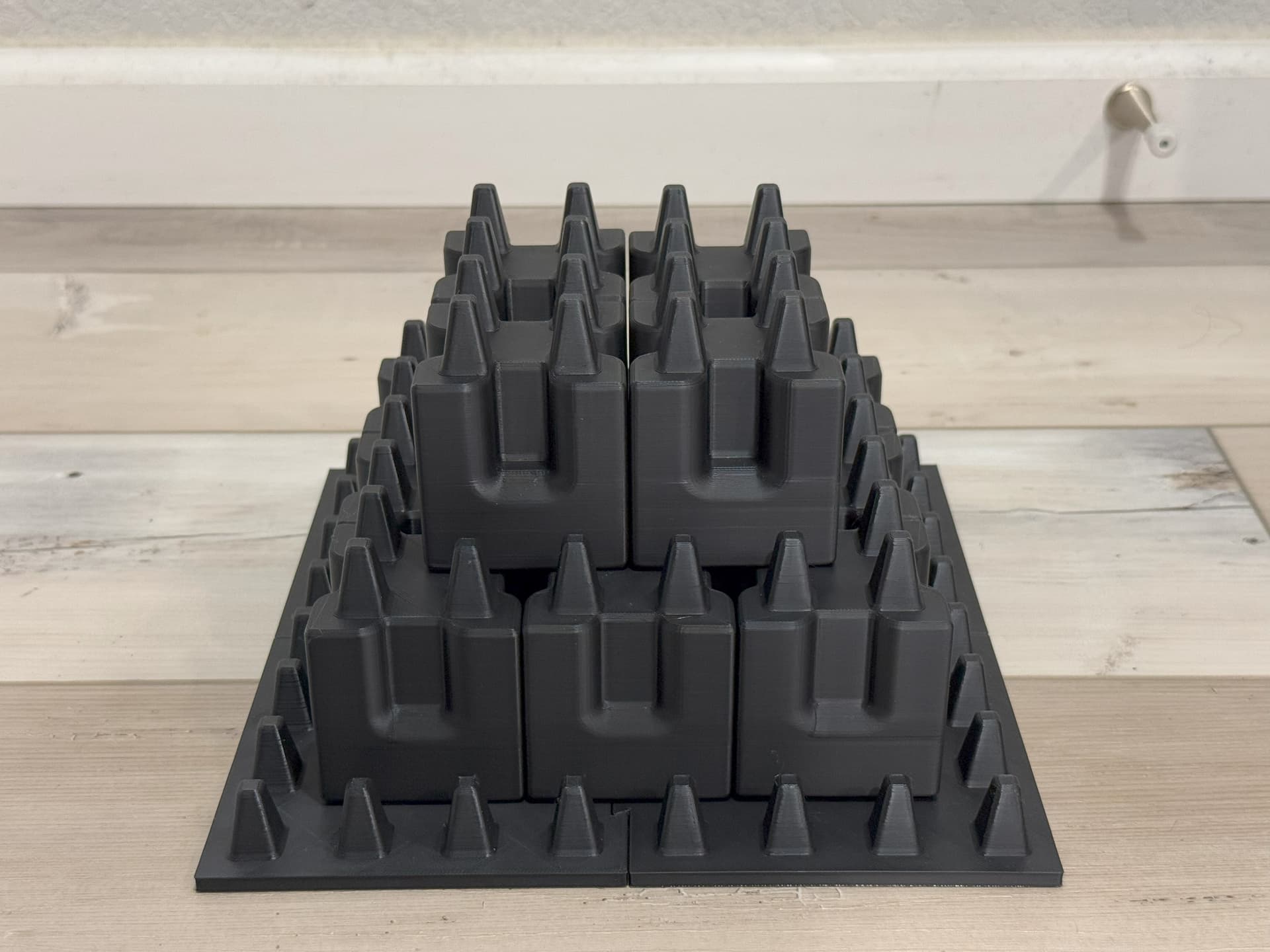

We demonstrate a robotic system that autonomously builds the outer shell of a Mars habitat using only vision input, with no human in the loop. The shell is represented by custom 3D-printed blocks.

Our workflow starts with a text description of the desired structure (e.g., 'build a tower') and the dimensions of the available blocks. Both are given to an LLM such as ChatGPT, which outputs an array of block positions and other metadata. We then use the LLM to determine the order in which to place the blocks.
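The write-up does not specify the exact format of the LLM's output. The sketch below therefore assumes a hypothetical JSON array in which each block has an `id`, integer grid coordinates `x` and `y`, and a `level` (0 for the ground layer). It parses that array and then checks whether an LLM-proposed placement order is physically buildable, meaning every block above the ground is placed only after the block directly beneath it. A check like this offers a cheap way to catch an ordering mistake from the LLM before the arm moves.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    # Hypothetical schema: integer grid cell plus stacking level (0 = ground layer).
    id: int
    x: int
    y: int
    level: int


def parse_blocks(llm_text: str) -> dict[int, Block]:
    """Parse the LLM's JSON array of block placements into Block objects keyed by id."""
    data = json.loads(llm_text)
    return {b["id"]: Block(b["id"], b["x"], b["y"], b["level"]) for b in data}


def order_is_buildable(blocks: dict[int, Block], order: list[int]) -> bool:
    """Check that every block appears exactly once and rests on an already-placed block."""
    if sorted(order) != sorted(blocks):
        return False
    occupied: set[tuple[int, int, int]] = set()
    for block_id in order:
        b = blocks[block_id]
        if b.level > 0 and (b.x, b.y, b.level - 1) not in occupied:
            return False  # nothing underneath this block yet
        occupied.add((b.x, b.y, b.level))
    return True


llm_output = '[{"id": 0, "x": 0, "y": 0, "level": 0}, {"id": 1, "x": 0, "y": 0, "level": 1}]'
blocks = parse_blocks(llm_output)
print(order_is_buildable(blocks, [0, 1]))  # ground block first, so buildable
print(order_is_buildable(blocks, [1, 0]))  # top block first, so rejected
```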

Our system then combines this LLM output with the vision system to drive the gripper end effector and construct the structure. The vision system does not use AR tags to locate individual blocks or the base frame; instead, it relies on several edge-detection models. We made this choice to simulate the Martian environment, where AR tags realistically will not be available.
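The specific edge-detection models are not named here. As a self-contained stand-in, the sketch below applies a classic 3×3 Sobel operator to a small grayscale image (a list of rows of floats). It then takes the centroid of strong-edge pixels as a rough block location in pixel coordinates. The threshold and the toy image are assumptions. Converting pixel coordinates into the robot's base frame would also require camera calibration, which is not shown.

```python
import math


def sobel_magnitude(img: list[list[float]]) -> list[list[float]]:
    """Gradient magnitude from 3x3 Sobel kernels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    gx_k = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    gy_k = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0.0
            for i in range(3):
                for j in range(3):
                    p = img[r + i - 1][c + j - 1]
                    gx += gx_k[i][j] * p
                    gy += gy_k[i][j] * p
            out[r][c] = math.hypot(gx, gy)
    return out


def locate_block(img: list[list[float]], threshold: float):
    """Return the (row, col) centroid of pixels whose edge strength exceeds threshold, or None."""
    mag = sobel_magnitude(img)
    pts = [(r, c) for r, row in enumerate(mag) for c, v in enumerate(row) if v > threshold]
    if not pts:
        return None
    return (sum(r for r, _ in pts) / len(pts), sum(c for _, c in pts) / len(pts))


# Toy 8x8 frame: a bright 2x2 "block" on a dark background.
frame = [[1.0 if 3 <= r <= 4 and 3 <= c <= 4 else 0.0 for c in range(8)] for r in range(8)]
print(locate_block(frame, threshold=1.0))
```

In practice a library implementation such as OpenCV's Sobel or Canny functions would replace the hand-written loops. The pure-Python version simply keeps the technique visible.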

The GPT and vision outputs feed into our PID-based control system, which picks and places the blocks.
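The controller's gains and structure are not documented, so the following is a textbook discrete PID loop with illustrative gains, not the project's tuned controller. It drives a toy one-dimensional joint whose velocity equals the controller output minus a constant disturbance (a stand-in for an unmodelled load such as gravity). Running it with and without the integral term shows why the I term matters. Proportional control alone settles at an offset of `disturbance / kp`, while the full PID loop removes that offset.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller; gains here are illustrative only."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt  # accumulate error for the I term
        # No derivative on the very first step, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 1.0, dt: float = 0.01, steps: int = 2000,
             disturbance: float = 0.5) -> float:
    """Toy joint model: velocity = controller output minus a constant disturbance."""
    position = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, position, dt)
        position += (u - disturbance) * dt
    return position


print(round(simulate(PID(kp=4.0, ki=2.0, kd=0.05)), 3))  # integral term removes the offset
print(round(simulate(PID(kp=4.0, ki=0.0, kd=0.0)), 3))   # P only: offset of disturbance / kp remains
```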

The end goal is to show that the system can repeatedly pick and place blocks over time to construct the Mars habitat.

Real-World Applications

The applications for this system are not limited to Mars; it is also useful on Earth. In dangerous environments (such as deep underground construction or mining) where it is unsafe for humans to operate equipment, the system can identify objects to use in construction or excavation. The key point is that most robotic systems require a baseline understanding of how robots work, and not everybody has a technical background. Because our system is a full-stack solution that needs only a natural-language input, it is accessible to a wider range of end users.

Consumer robotics is also a growing market, and the system could act as a housekeeper. This would require improvements on the natural language processing side and rigorous safety testing on the controls side. With those in place, the robotic arm could pick up objects (like trash) or sort objects on tables and in hard-to-reach spaces.

System Architecture

Overview of the Mars Habitat Builder system architecture

Initial block processing and metadata extraction

GPT placement and coordination system

Custom rendering and visualization output
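Only the name of the rendering stage is given here. As a hypothetical illustration of the kind of check a visualization step can provide, the sketch below prints a planned structure as one top-down text map per level. It assumes blocks are described by non-negative integer grid cells `(x, y, level)`.

```python
def render_levels(cells: list[tuple[int, int, int]]) -> str:
    """Render (x, y, level) grid cells as one ASCII top-down map per level ('#' = block)."""
    if not cells:
        return ""
    max_x = max(x for x, _, _ in cells)
    max_y = max(y for _, y, _ in cells)
    max_level = max(lvl for _, _, lvl in cells)
    occupied = set(cells)
    lines = []
    for level in range(max_level + 1):
        lines.append(f"level {level}:")
        for y in range(max_y + 1):
            lines.append("".join("#" if (x, y, level) in occupied else "." for x in range(max_x + 1)))
    return "\n".join(lines)


# A three-block base with one block stacked on the middle.
print(render_levels([(0, 0, 0), (1, 0, 0), (2, 0, 0), (1, 0, 1)]))
```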

Design

Success Criteria & Testing Approach

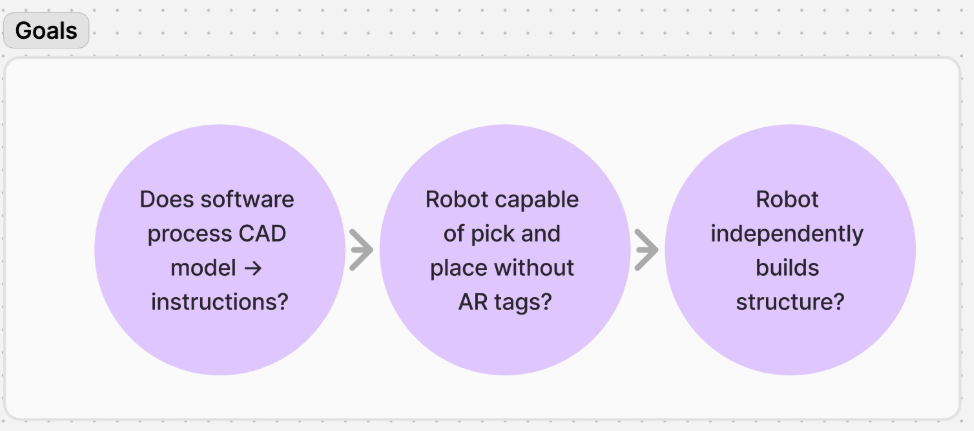

Our project success is measured across three parallel testing areas:

- Software Processing: Verify accurate conversion of CAD models into robot instructions

- Vision System: Demonstrate block recognition and pickup without ArUco markers

- Autonomous Construction: Validate that the system builds structures without human intervention and places every block correctly
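For the Autonomous Construction criterion, "correct block placement" needs a measurable definition. One possible sketch assumes the vision system can report each placed block's centre in metres, in the same frame as the plan. It compares observed positions against planned ones and lists blocks that are missing or outside a tolerance. The 1 cm default tolerance and the dictionary layout are hypothetical.

```python
import math


def placement_errors(planned: dict[int, tuple[float, float, float]],
                     observed: dict[int, tuple[float, float, float]],
                     tolerance_m: float = 0.01) -> list[int]:
    """Return ids of blocks that are missing or farther than tolerance_m from their planned centre."""
    failures = []
    for block_id, target in planned.items():
        actual = observed.get(block_id)
        if actual is None or math.dist(target, actual) > tolerance_m:
            failures.append(block_id)
    return failures


planned = {0: (0.0, 0.0, 0.0), 1: (0.05, 0.0, 0.0)}
print(placement_errors(planned, {0: (0.002, 0.001, 0.0)}))  # block 1 was never observed
```

An empty list would count as a pass for a given build; repeating the check over many builds turns it into a repeatability measure.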

Success Levels

- Base Success: Fully functional system with square blocks and single-level structures

- Advanced Success: Extended functionality supporting multi-level structures

Reach Goals

- LEGO-style block compatibility for complex structures with overhanging edges

- Support for non-square block geometries

- Alternative input methods (e.g., hand-drawn sketches)

- Handling of smaller-scale blocks

- Block placement at multiple rotations

- Support for heavier blocks

- Dynamic block selection from non-fixed layouts

Design Approach

We chose a modular system architecture that separates concerns between vision processing, LLM integration, and robotic control. This design allows for independent testing and optimization of each component while maintaining system cohesion.
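One way to realize this separation is to define each component as a `typing.Protocol`. The names used here (`Planner`, `Vision`, `Controller`, and the fake classes) are hypothetical and are not the project's code. The build loop depends only on those interfaces, so any module can be swapped for a stand-in during testing, for example to run the pipeline without an LLM, camera, or arm attached.

```python
from typing import Protocol

Position = tuple[float, float, float]


class Planner(Protocol):
    def plan(self, description: str) -> list[Position]: ...


class Vision(Protocol):
    def locate_next_block(self) -> Position: ...


class Controller(Protocol):
    def pick(self, at: Position) -> None: ...
    def place(self, at: Position) -> None: ...


def build(description: str, planner: Planner, vision: Vision, arm: Controller) -> int:
    """Run the pipeline end to end; each stage sees the others only through its interface."""
    targets = planner.plan(description)
    for target in targets:
        arm.pick(vision.locate_next_block())
        arm.place(target)
    return len(targets)


# Stand-ins let each module be tested without the real LLM, camera, or arm.
class FakePlanner:
    def plan(self, description: str) -> list[Position]:
        return [(0.0, 0.0, 0.0), (0.0, 0.0, 0.05)]


class FakeVision:
    def locate_next_block(self) -> Position:
        return (0.3, 0.0, 0.0)


class RecordingArm:
    def __init__(self) -> None:
        self.log: list[tuple[str, Position]] = []

    def pick(self, at: Position) -> None:
        self.log.append(("pick", at))

    def place(self, at: Position) -> None:
        self.log.append(("place", at))


arm = RecordingArm()
print(build("build a tower", FakePlanner(), FakeVision(), arm))
print(arm.log)
```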

Design Trade-offs

Key trade-offs in our design process included:

- Choosing markerless vision over AR tags to better match realistic deployment conditions, at the cost of increased complexity

- Choosing PID control over more complex control schemes for its reliability

- Using standardized block shapes to simplify manipulation, at the cost of structural variety

Engineering Application Impact

Our design choices shape the system's real-world applicability in four ways:

- Robustness: Vision-based detection allows operation in varying lighting conditions

- Durability: Modular block design enables easy component replacement and maintenance

- Efficiency: Optimized movement patterns reduce construction time and energy usage

- Adaptability: LLM integration lets users specify structures in natural language