Hardware

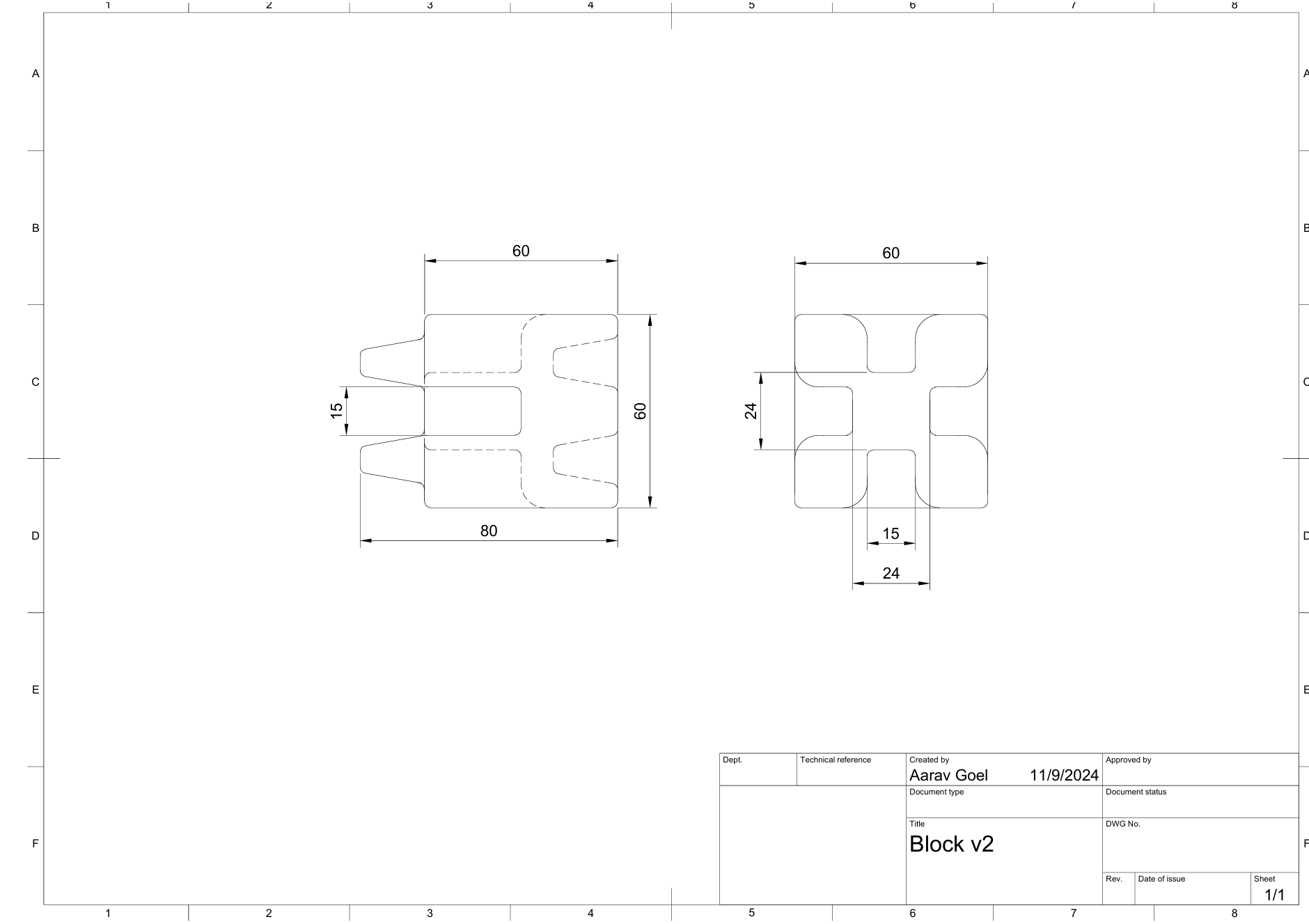

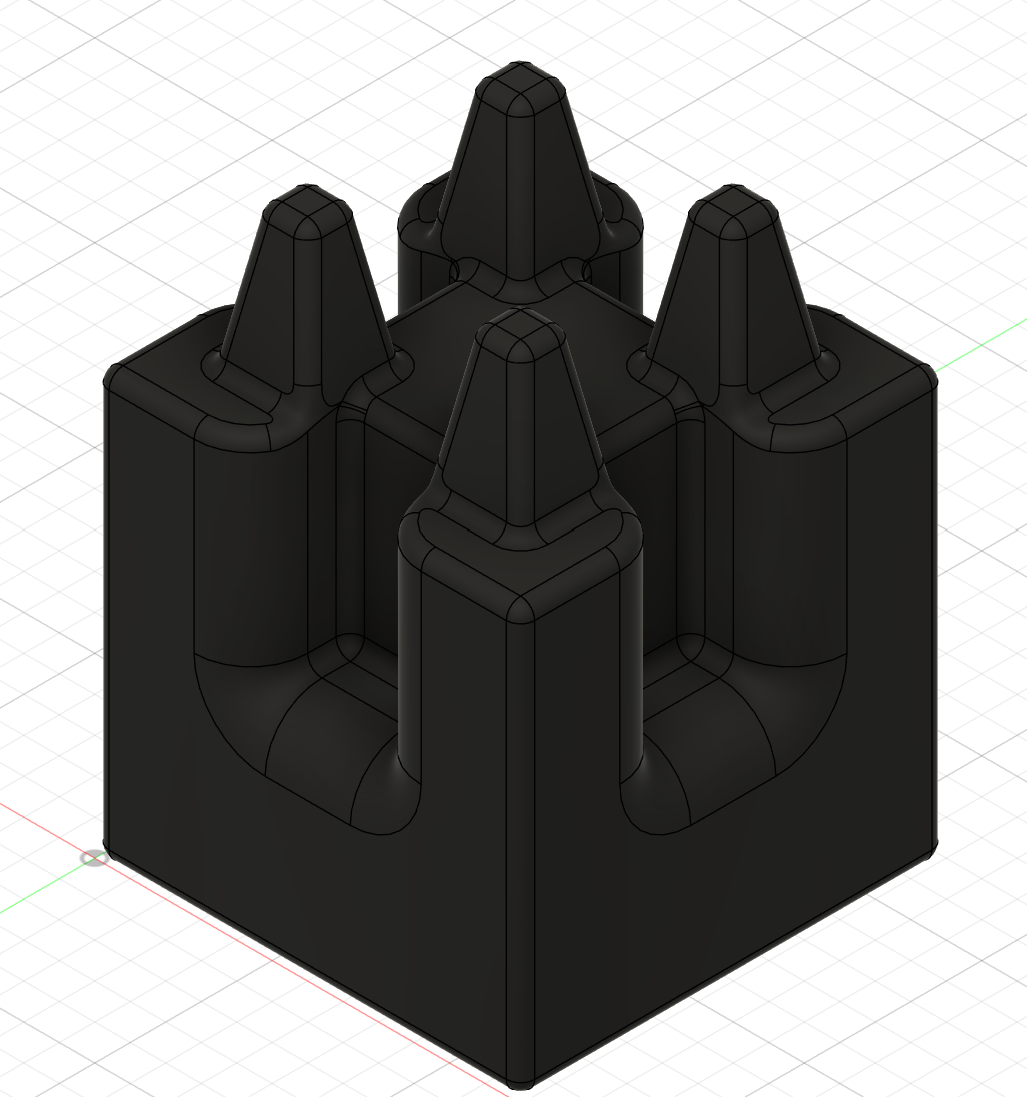

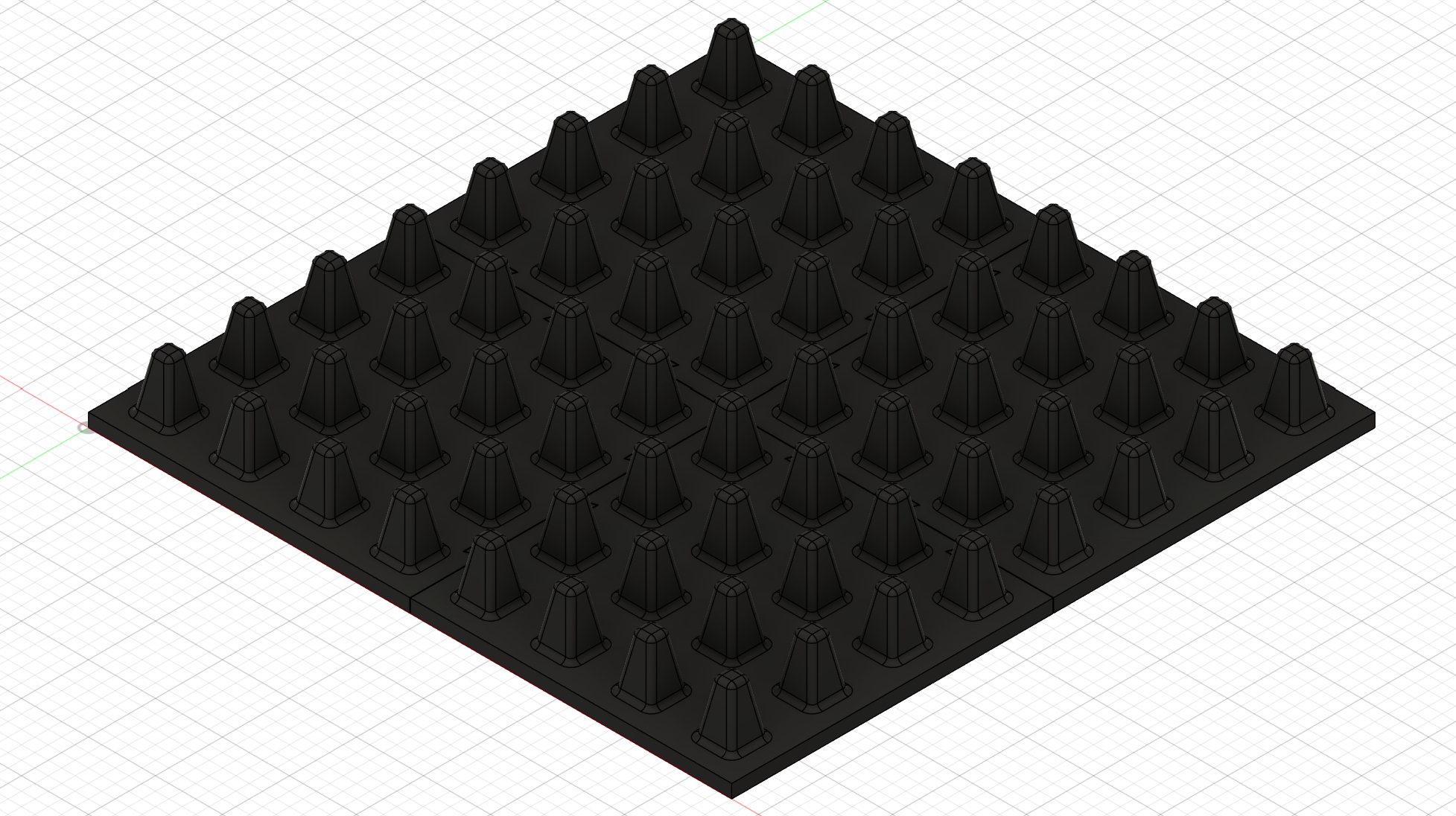

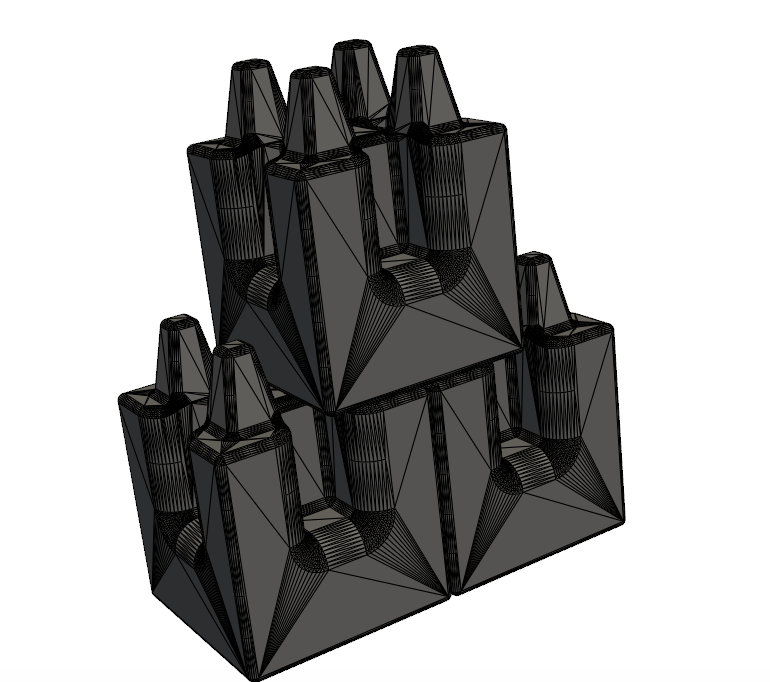

Block Design

We designed and 3D-printed two main components: the baseplate and the block. The block's interlocking mechanism is similar to Lego's, but optimized for the robotic arm. Side grooves guide the gripper to the correct spot, and tapered prongs let the blocks catch and interlock even when there is a small positional error.

ChatGPT

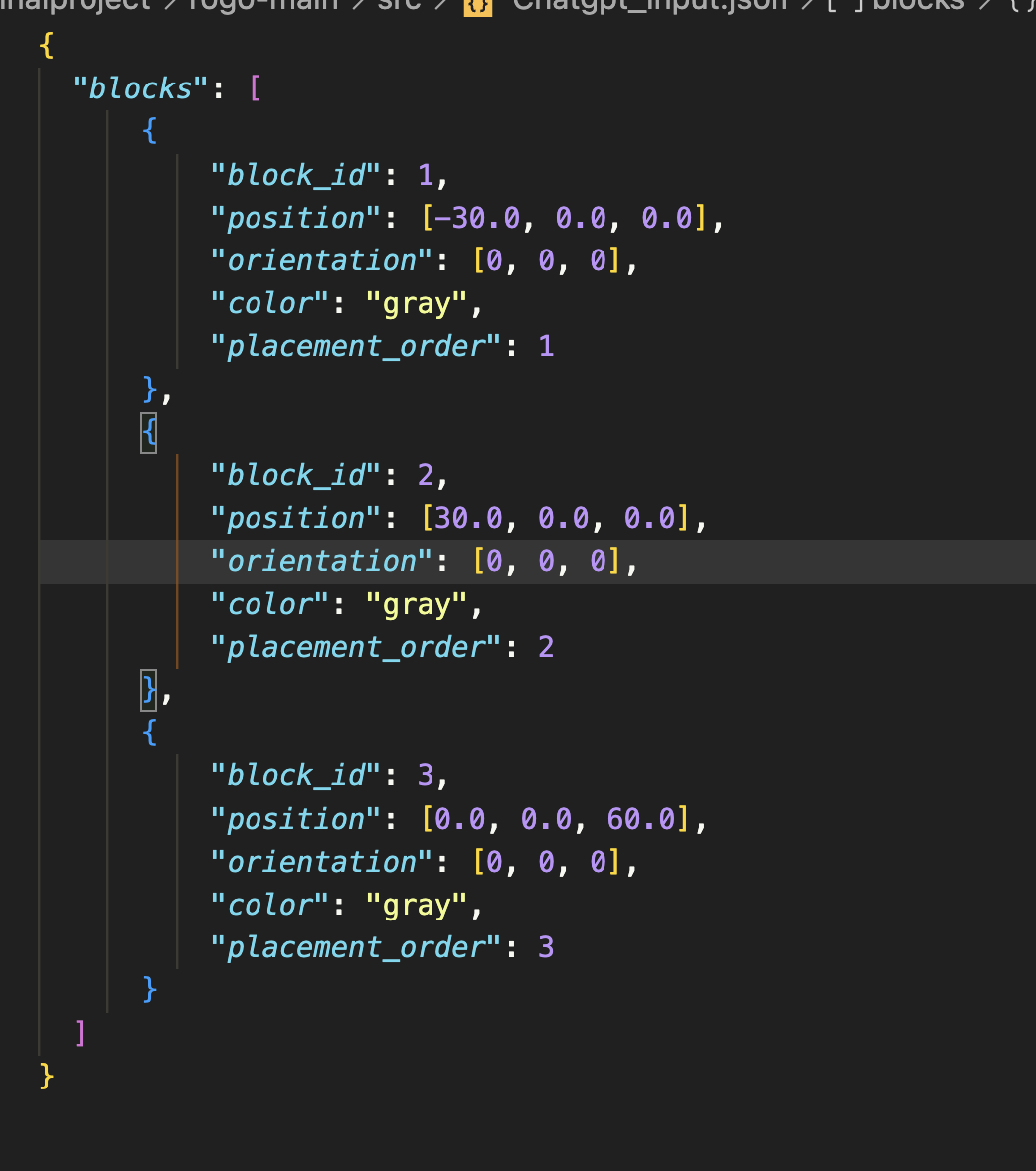

Block Position Output

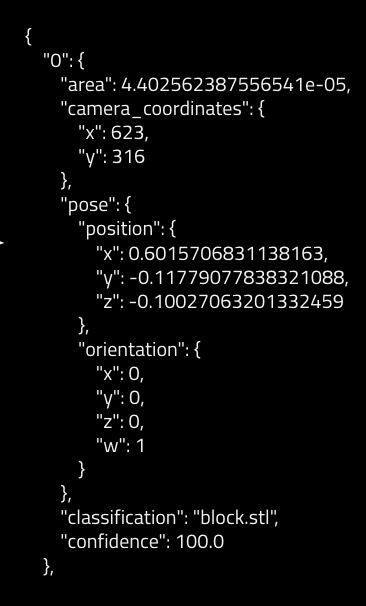

After the user inputs their structure instructions (e.g. "build a tower", "design the Taj Mahal"), ChatGPT takes the constraints into account (the block dimensions, and the fact that prongs do not add to the structure's height because they insert into the block above) and outputs block coordinates that satisfy both those constraints and the user's request.
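To make the height constraint concrete, here is a minimal sketch of how layer heights could be computed for a single-column tower. The dimensions and the names `BODY_HEIGHT_MM`, `BlockPose`, and `tower_positions` are illustrative assumptions, not the values or code used in the project.

```python
from dataclasses import dataclass

# Hypothetical block body height in millimetres; the real dimension is not stated here.
# Prongs slot into the block above, so only the body height counts per layer.
BODY_HEIGHT_MM = 20.0


@dataclass
class BlockPose:
    x: float
    y: float
    z: float  # height of the bottom face of the block body


def tower_positions(n_blocks, x=0.0, y=0.0):
    """Positions for a single-column tower: each layer rises by the body height only."""
    return [BlockPose(x, y, layer * BODY_HEIGHT_MM) for layer in range(n_blocks)]


for pose in tower_positions(3):
    print(pose)
```

The point of the sketch is only that the prong height never appears in the per-layer offset.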

ChatGPT also outputs the order in which to place the blocks (e.g. in a pyramid structure, the blocks with lower Z-values must be placed first).

Below is an example of the JSON ChatGPT outputs:

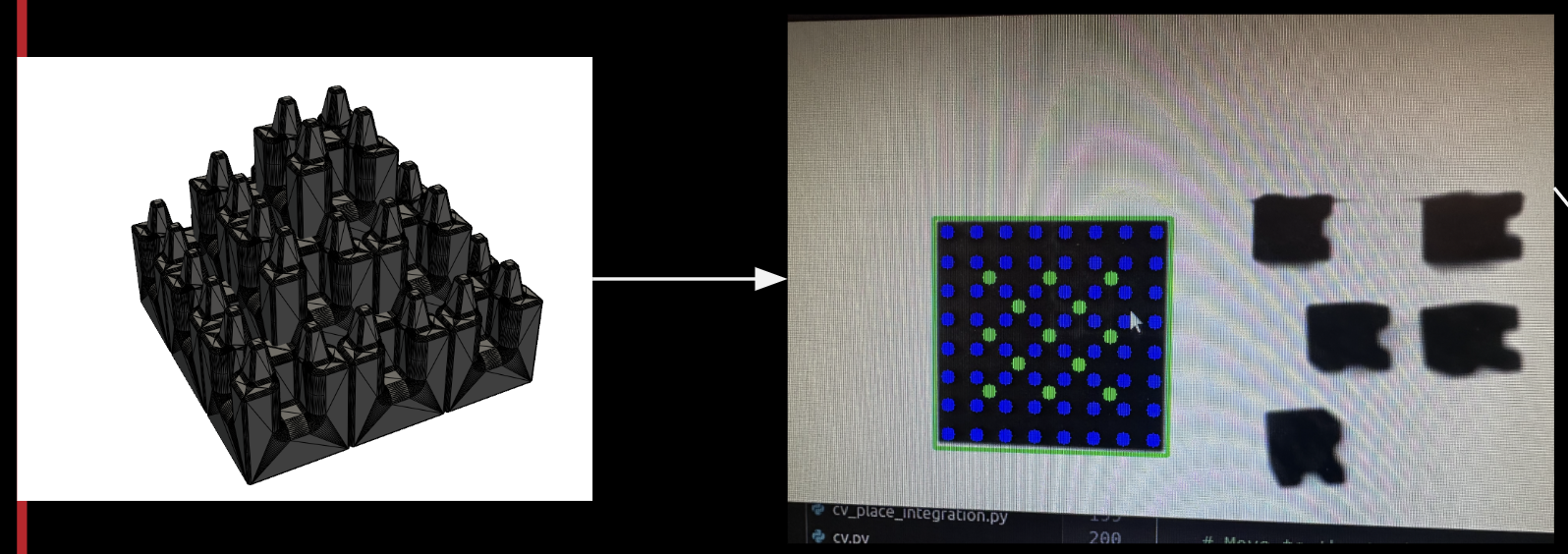
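The placement-order rule (lower Z-values first) can also be checked or enforced after the fact with a simple sort. The sketch below assumes a hypothetical schema with a `blocks` list and `id`, `x`, `y`, `z` fields; the project's actual field names may differ.

```python
import json

# Hypothetical output; the field names are assumptions, not the project's schema.
example_output = """
{
  "blocks": [
    {"id": "top",   "x": 0.5, "y": 0, "z": 1},
    {"id": "left",  "x": 0,   "y": 0, "z": 0},
    {"id": "right", "x": 1,   "y": 0, "z": 0}
  ]
}
"""


def placement_order(raw_json):
    """Sort blocks bottom-up so that supporting blocks are placed first."""
    blocks = json.loads(raw_json)["blocks"]
    # Ties within a layer are broken by y, then x, to keep the order deterministic.
    return sorted(blocks, key=lambda b: (b["z"], b["y"], b["x"]))


order = [b["id"] for b in placement_order(example_output)]
print(order)
```

Sorting on the robot side is a cheap safeguard in case the model returns blocks out of order.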

We also use a script to render ChatGPT's output JSON into an image.

Computer Vision

Detecting Blocks

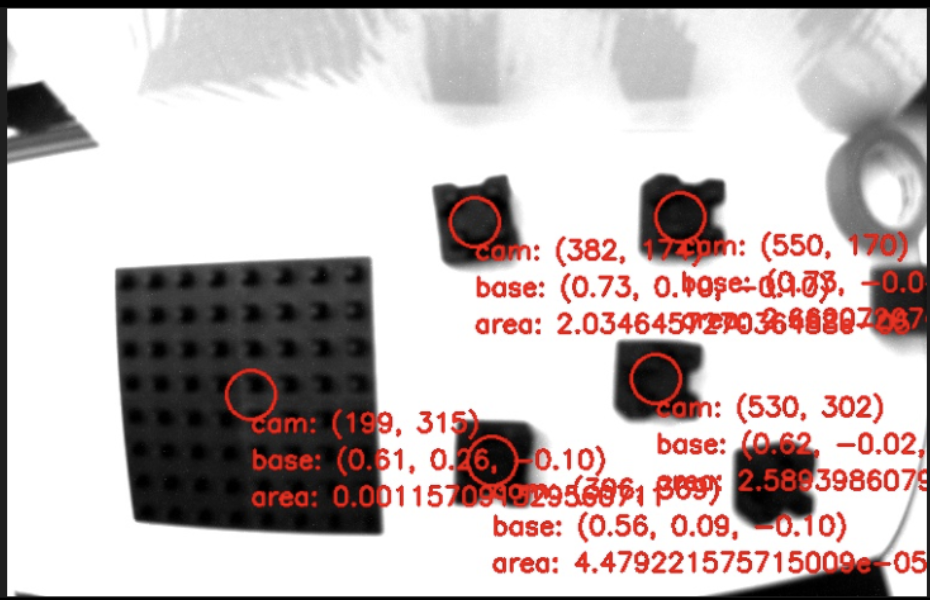

Using Canny edge detection, we were able to distinguish objects from the background, even with the grayscale image from the robot's wrist-mounted hand camera:

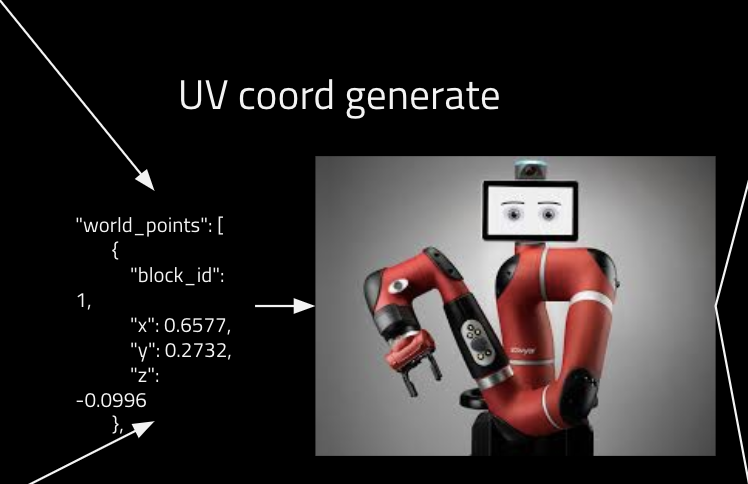

We then took the detected block center coordinates and transformed them from pixel coordinates (u, v) to real-world coordinates (x, y, z) using the camera's intrinsic parameters.
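As a sketch of this transformation, the pinhole camera model maps a pixel to a 3D point in the camera frame once the depth along the optical axis is known. The intrinsic values below are placeholders, the depth is assumed to be known (for example from the camera's height above the table), lens distortion is ignored, and the further transform from the camera frame to the robot base frame is omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x), in pixels
    cy: float  # principal point (y), in pixels


def pixel_to_camera(u, v, depth, K):
    """Back-project pixel (u, v) at a known depth into camera-frame (x, y, z)."""
    x = (u - K.cx) * depth / K.fx
    y = (v - K.cy) * depth / K.fy
    return (x, y, depth)


# Placeholder intrinsics, not the Sawyer camera's calibrated values.
K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(pixel_to_camera(320.0, 240.0, 0.5, K))  # pixel at the principal point
print(pixel_to_camera(920.0, 240.0, 0.5, K))  # pixel 600 px to the right of it
```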

Distinguishing Blocks From Baseplate

We distinguished the baseplate from the blocks by surface area. For each detected object, we took its four corners in real-world (transformed) coordinates, computed the area of the resulting quadrilateral, and compared it against thresholds to classify the object as baseplate, block, or noise.
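The area test can be sketched with the shoelace formula, which gives the area of a simple polygon from its corners in order. The threshold values and the names `quad_area` and `classify` are hypothetical; suitable thresholds depend on the real block and baseplate sizes.

```python
def quad_area(corners):
    """Shoelace formula: area of a simple polygon whose corners are listed in order."""
    twice_area = 0.0
    n = len(corners)
    for i in range(n):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical thresholds in square metres.
NOISE_MAX_AREA_M2 = 0.0004
BASEPLATE_MIN_AREA_M2 = 0.01


def classify(corners):
    """Label a detected quadrilateral by its real-world area."""
    area = quad_area(corners)
    if area < NOISE_MAX_AREA_M2:
        return "noise"
    if area >= BASEPLATE_MIN_AREA_M2:
        return "baseplate"
    return "block"


block = [(0.0, 0.0), (0.04, 0.0), (0.04, 0.04), (0.0, 0.04)]
plate = [(0.0, 0.0), (0.2, 0.0), (0.2, 0.2), (0.0, 0.2)]
speck = [(0.0, 0.0), (0.005, 0.0), (0.005, 0.005), (0.0, 0.005)]
print(classify(block), classify(plate), classify(speck))
```

The corners must be listed in order around the perimeter; an unordered list would describe a self-intersecting shape and give the wrong area.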

Generating Placement Instructions

Given the projected real-world locations of the baseplate and the building blocks, we were able to generate placement instructions specifying which block goes where.
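One possible way to combine the detections with the target structure is sketched below: each detected block is paired with one target slot, lowest layer first, and the target offsets are expressed relative to the baseplate position. The data layout and the function `make_instructions` are assumptions for illustration and not necessarily how the project matched blocks to slots.

```python
def make_instructions(baseplate_xy, block_xys, targets):
    """Pair detected loose blocks with target slots, lowest layer first.

    baseplate_xy: (x, y) of the baseplate reference point in the world frame.
    block_xys:    list of (x, y) pick locations of detected blocks.
    targets:      list of dicts with "x", "y", "z" offsets relative to the baseplate.
    """
    if len(block_xys) < len(targets):
        raise ValueError("not enough detected blocks for the requested structure")
    ordered_targets = sorted(targets, key=lambda t: t["z"])
    bx, by = baseplate_xy
    instructions = []
    for pick_xy, target in zip(block_xys, ordered_targets):
        place = (bx + target["x"], by + target["y"], target["z"])
        instructions.append({"pick": pick_xy, "place": place})
    return instructions


steps = make_instructions(
    baseplate_xy=(0.5, 0.0),
    block_xys=[(0.3, 0.2), (0.35, 0.2)],
    targets=[{"x": 0.0, "y": 0.0, "z": 0.02}, {"x": 0.0, "y": 0.0, "z": 0.0}],
)
for step in steps:
    print(step)
```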

PID/Controls

We used PID control to move the arm to the real-world locations predicted by the computer vision step. We used gain (K) values that are known to work with the Sawyer arm, which let it move smoothly and correct its trajectory.
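For readers unfamiliar with PID control, here is a minimal one-dimensional sketch. The gains are placeholders and not the Sawyer values, and the simulated plant (position changes at the commanded velocity) is a deliberate simplification of a real arm.

```python
class PID:
    """Textbook PID controller acting on a scalar error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative on the first sample, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target, steps=2000, dt=0.01):
    """Drive a simulated 1D position toward `target` using velocity commands."""
    pid = PID(kp=2.0, ki=0.5, kd=0.1)  # placeholder gains
    position = 0.0
    for _ in range(steps):
        velocity = pid.update(target - position, dt)
        position += velocity * dt  # idealized plant: velocity is tracked exactly
    return position


final_position = simulate(1.0)
print(final_position)
```

In a real setup the same update would typically run once per control cycle for each axis or joint, with the error taken between the target from the vision step and the measured pose.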