Results

Final Outcomes

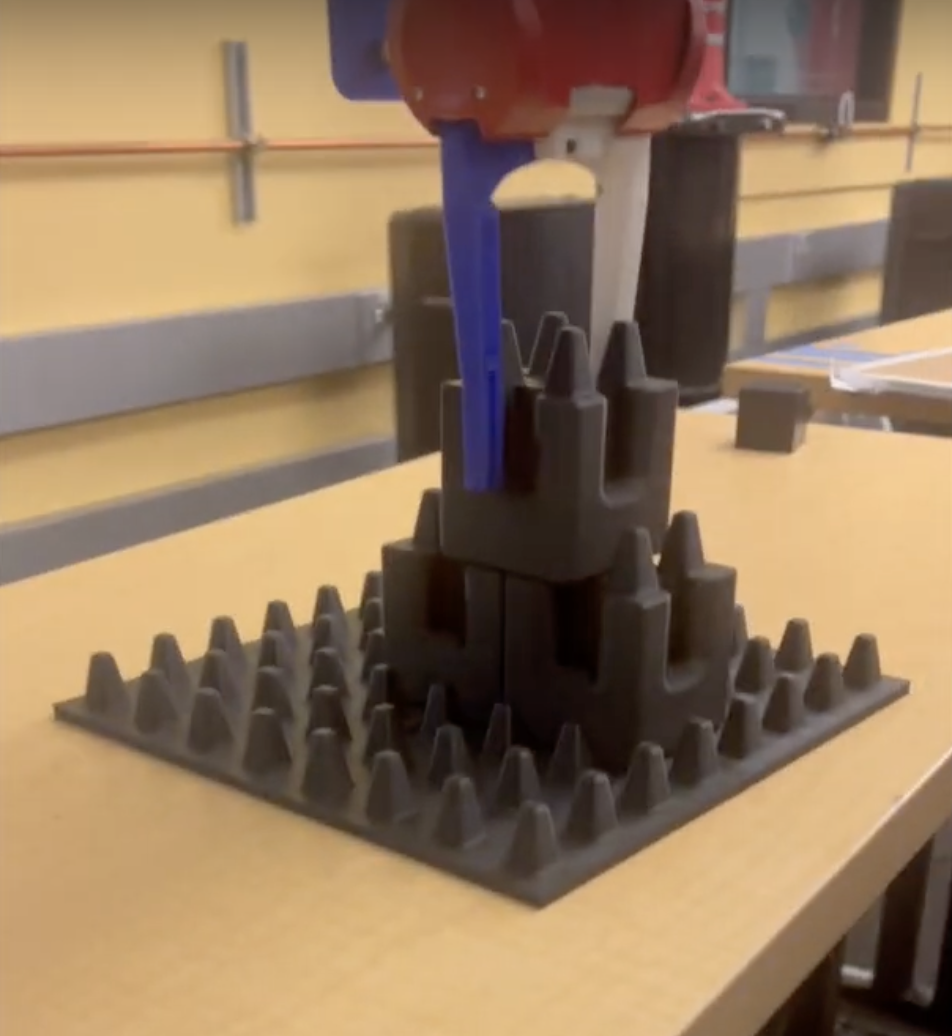

Our system can build a 2x1 pyramid structure, requiring only tiny (millimeter-scale) manual adjustments for the blocks to be placed correctly.

The picture below shows the arm releasing the last block of the 2x1 structure:

After initial testing and tuning, our system detected and picked up blocks without human intervention 85% of the time.

Placement of a picked-up block had a lower success rate of 70%, since it also had to account for the positions of the blocks already placed.

The team was happy with these success rates, given our current limitations.
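As a rough way to combine the two figures above, the sketch below multiplies the pick rate by the placement rate (which was measured only on blocks that had already been picked up) to estimate how often a single block goes from detection to placement with no human help. Extending this to a whole structure assumes that every block attempt succeeds or fails independently; that is a simplifying assumption for illustration, not something we measured.

```python
def end_to_end_rate(pick_rate, place_rate_given_pick):
    """Chance that one block is both picked up and placed without intervention."""
    return pick_rate * place_rate_given_pick


def structure_rate(per_block_rate, n_blocks):
    """Chance that all n_blocks succeed, ASSUMING independent attempts."""
    return per_block_rate ** n_blocks


per_block = end_to_end_rate(0.85, 0.70)  # measured pick rate x measured placement rate
print(f"per-block success: {per_block:.3f}")  # per-block success: 0.595
for n in (1, 2, 3):
    print(f"{n} block(s) with no intervention: {structure_rate(per_block, n):.3f}")
```

Because the per-block rates multiply, the chance of finishing a structure with no manual touch-ups drops quickly as the block count grows, which is why small improvements to either stage matter.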

Future Improvements

Upgrade Camera Setup

In an attempt to build an entirely self-contained robotic arm that could sense and build without extra equipment (who would set up the tripod on Mars!?), we chose to rely only on Sawyer's wrist camera to detect the blocks.

Due to the low resolution of this camera, we encountered many difficulties detecting the blocks. We narrowed the problem down to the camera resolution by testing the CV algorithm on our laptops, where the blocks were detected with much higher accuracy.

In future iterations, we could choose a robotic arm with higher quality built-in cameras, or opt for a tripod setup that overlooks the workspace.

Ability to Deal with Variety of Block Designs

Our system currently accounts for one type/dimension of block (see Hardware section on the implementation page).

To build an ideal Mars habitat, we would print blocks in many different dimensions. To accommodate this, we would extend our block detection algorithm to not only detect where blocks are in the workspace, but also classify each block's type.
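One way to add the classification step is to match each detection's measured dimensions against a catalog of printed block types. The sketch below is a hypothetical illustration: it assumes the detector already reports a block's three dimensions in millimeters, and the catalog names, sizes, and 10 mm tolerance are placeholders rather than our real block designs.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockType:
    name: str
    length_mm: float
    width_mm: float
    height_mm: float


# HYPOTHETICAL catalog: placeholder names and sizes, not the project's real blocks.
CATALOG = [
    BlockType("small", 50.0, 50.0, 50.0),
    BlockType("long", 100.0, 50.0, 50.0),
    BlockType("slab", 100.0, 100.0, 25.0),
]


def classify(measured_mm, catalog=CATALOG, tolerance_mm=10.0):
    """Return the catalog entry closest to the measured (l, w, h), or None.

    Both sets of dimensions are sorted largest-first, so the match does not
    depend on how the block happens to be oriented in the workspace.
    """
    measured = sorted(measured_mm, reverse=True)
    best, best_err = None, math.inf
    for block in catalog:
        ref = sorted((block.length_mm, block.width_mm, block.height_mm), reverse=True)
        err = max(abs(m - r) for m, r in zip(measured, ref))  # worst-axis error
        if err < best_err:
            best, best_err = block, err
    return best if best_err <= tolerance_mm else None


print(classify((98, 52, 49)).name)  # long
```

Returning None when nothing is within tolerance lets the system skip an unrecognized object instead of forcing it into the wrong category.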

Obscure Inputs

The ChatGPT workflow was designed to take only text input. We believe allowing obscure inputs such as CAD models (e.g., .stl files) and even hand-drawn structures would expand the robot's use cases considerably.

To accomplish this, we would need an algorithm that analyzes a hand-drawn sketch and returns the best-fitting CAD model. We would also create an algorithm that handles CAD model inputs by analyzing the .stl file and outputting a JSON file of block coordinates.
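For the CAD path, one possible approach (not something we implemented) is to voxelize the model: tile its bounding box with block-sized cells and keep each cell whose center lies inside the mesh, using ray casting with the even-odd rule and the Möller-Trumbore ray/triangle test. The sketch assumes an ASCII (not binary) .stl file describing a closed, watertight mesh; the function names and the {"blocks": [...]} JSON layout are hypothetical.

```python
import json
import math


def parse_ascii_stl(text):
    """Return triangles, each a tuple of three (x, y, z) vertices, from ASCII STL text."""
    verts = [tuple(float(v) for v in line.split()[1:4])
             for line in text.splitlines() if line.strip().startswith("vertex")]
    return [tuple(verts[i:i + 3]) for i in range(0, len(verts) - 2, 3)]


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _ray_hits(origin, direction, tri, eps=1e-12):
    """Möller-Trumbore test: True if the ray hits the triangle in front of origin."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return False
    inv = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return False
    q = _cross(s, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return False
    return _dot(e2, q) * inv > eps  # hit must lie ahead of the origin


# Slightly skewed direction so rays are unlikely to graze shared edges exactly.
_RAY_DIR = (1.0, 0.1234, 0.0567)


def is_inside(point, triangles):
    """Even-odd rule: inside a closed mesh iff the ray crosses it an odd number of times."""
    return sum(_ray_hits(point, _RAY_DIR, t) for t in triangles) % 2 == 1


def voxelize(triangles, block_size):
    """Centers of block-sized cells (sx, sy, sz) whose center lies inside the mesh."""
    pts = [v for tri in triangles for v in tri]
    lo = [min(p[a] for p in pts) for a in range(3)]
    hi = [max(p[a] for p in pts) for a in range(3)]
    # Small tolerance stops float noise from adding an extra, empty row of cells.
    counts = [max(1, math.ceil((hi[a] - lo[a]) / block_size[a] - 1e-9)) for a in range(3)]
    centers = []
    for k in range(counts[2]):  # bottom layer first: a natural stacking order
        for j in range(counts[1]):
            for i in range(counts[0]):
                c = tuple(lo[a] + (idx + 0.5) * block_size[a]
                          for a, idx in enumerate((i, j, k)))
                if is_inside(c, triangles):
                    centers.append(c)
    return centers


def to_json(centers):
    """Serialize block centers using a hypothetical {"blocks": [...]} layout."""
    return json.dumps({"blocks": [{"x": x, "y": y, "z": z} for x, y, z in centers]})
```

For example, to_json(voxelize(parse_ascii_stl(text), (50.0, 50.0, 50.0))) would tile a model given in millimeters with 50 mm cubes. Each cell is kept or dropped as a whole, so sloped or curved surfaces come out as a staircase of blocks.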

Note: Considering how unlikely it is for ChatGPT to produce the user's exact desired structure on the first try from a hand-drawn picture, it would be best to enable a back-and-forth conversation between the user and ChatGPT to iterate on designs until the user is satisfied!

Together, these two algorithms would let us handle text, hand-drawn pictures, and CAD models and convert them all to the same output format: a JSON file.

Non-Planar Layout

Our current implementation assumes the blocks are lying on the same Z-plane and are moderately separated from one another. In a real-world scenario, this may not always be the case (who knows how organized our materials will be on Mars!).

To account for this scenario, the wrist camera could scan the entire workspace by performing a series of rotations and moving across the scene until it has captured all angles of the workspace. As the camera sees each new block, it estimates the block's location and saves it to the unplaced_blocks[n] list.
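The scan-and-record step could be sketched as follows. This is a hypothetical helper, assuming each detection has already been transformed from the camera frame into the robot's base frame (x, y, z in meters) using the arm's pose at capture time. Because the same block will usually be seen from several viewpoints, a detection within merge_radius of an existing entry is treated as another sighting and averaged in rather than appended as a new block. The 3 cm radius and the dictionary layout of each unplaced_blocks entry are placeholder choices.

```python
import math


def merge_detection(unplaced_blocks, detection, merge_radius=0.03):
    """Record one block detection (x, y, z in the base frame, meters).

    If the nearest known block is within merge_radius, treat the detection as
    another sighting of it and update its position as a running average;
    otherwise append it as a new, previously unseen block.
    """
    nearest = min(unplaced_blocks,
                  key=lambda e: math.dist(e["position"], detection),
                  default=None)
    if nearest is not None and math.dist(nearest["position"], detection) <= merge_radius:
        n = nearest["count"]
        nearest["position"] = tuple((p * n + d) / (n + 1)
                                    for p, d in zip(nearest["position"], detection))
        nearest["count"] = n + 1
        return nearest
    entry = {"position": tuple(detection), "count": 1}
    unplaced_blocks.append(entry)
    return entry


# Example: two views of the workspace; the first block is seen in both.
unplaced_blocks = []
views = [
    [(0.50, 0.10, 0.02)],
    [(0.51, 0.10, 0.02), (0.40, -0.20, 0.05)],  # second block sits on a different Z-plane
]
for view in views:
    for det in view:
        merge_detection(unplaced_blocks, det)
print(len(unplaced_blocks))  # 2
```

Averaging repeated sightings also tends to smooth out per-view localization noise, which matters more when blocks sit at different heights and are viewed from varied angles.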